This website accompanies the IWAENC 2024 submission "DSP-Informed Bandwidth Extension Using Locally-Conditioned and Linear Time-Varying Filter Subnetworks." Here, we provide listening examples of the approaches described in the paper.

Abstract

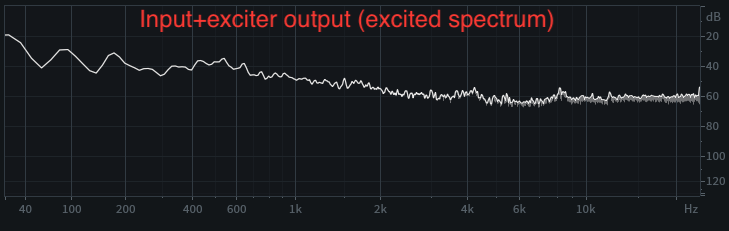

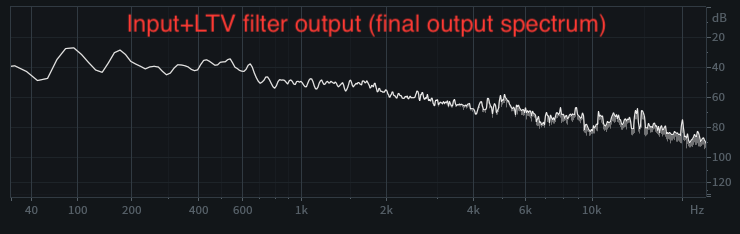

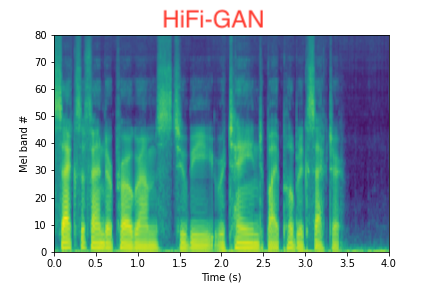

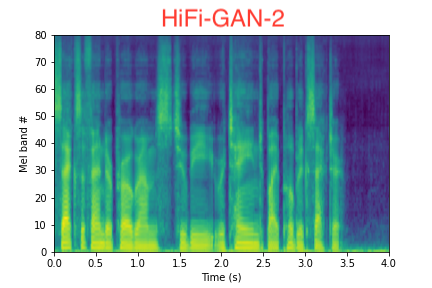

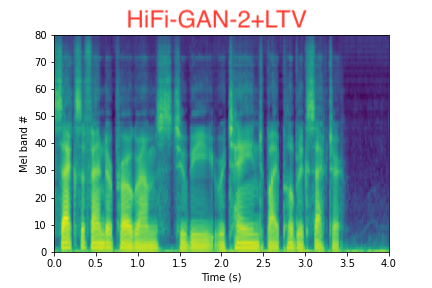

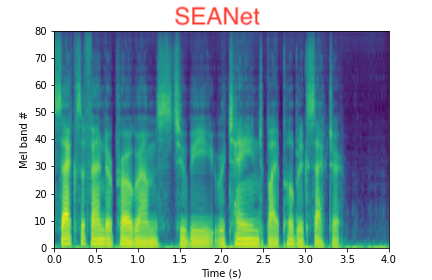

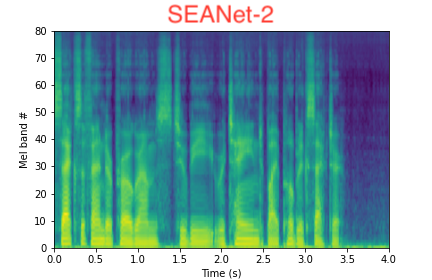

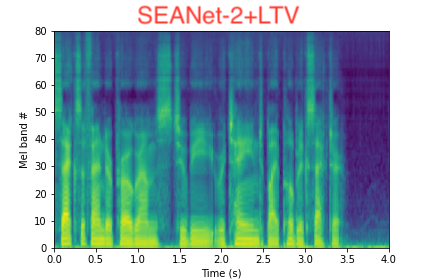

In this paper, we propose a dual-stage architecture for bandwidth extension (BWE) increasing the effective sampling rate of speech signals from 8 kHz to 48 kHz. Unlike existing end-to-end deep learning models, our proposed method explicitly models BWE using excitation and linear time-varying (LTV) filter stages. The excitation stage broadens the spectrum of the input, while the filtering stage properly shapes it based on outputs from an acoustic feature predictor. To this end, an acoustic feature loss term can implicitly promote the excitation subnetwork to produce white spectra in the upper frequency band to be synthesized. Experimental results demonstrate that the added inductive bias provided by our approach can improve upon BWE results using the generators from both SEANet or HiFi-GAN as exciters, and that our means of adapting processing with acoustic feature predictions is more effective than that used in HiFi-GAN-2. Secondary contributions include extensions of the SEANet model to accommodate local conditioning information, as well as the application of HiFi-GAN-2 for the BWE problem.

Audio Examples

All examples are generated using the VCTK corpus [1].

| Lo-res | Hi-res | HiFi-GAN | HiFi-GAN-2 | HiFi-GAN-2+LTV | SEANet | SEANet-2 | SEANet-2+LTV | |

| Sample 1 | ||||||||

| Sample 2 | ||||||||

| Sample 3 | ||||||||

| Sample 4 | ||||||||

| Sample 5 | ||||||||

| Sample 6 | ||||||||

| Sample 7 | ||||||||

| Sample 8 |

[1] C. Veaux, J. Yamagishi, and K. MacDonald, CSTR VCTK corpus: English multi-speaker corpus for CSTR voice cloning toolkit, The Centre for Speech Technology Research (CSTR), University of Edinburgh, Edinburgh, 0.8.0 edition, 2012.

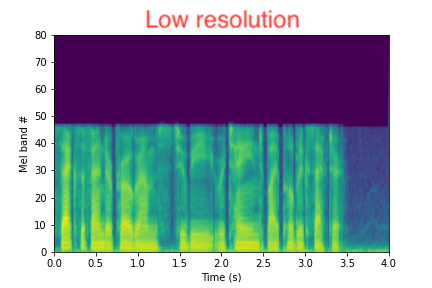

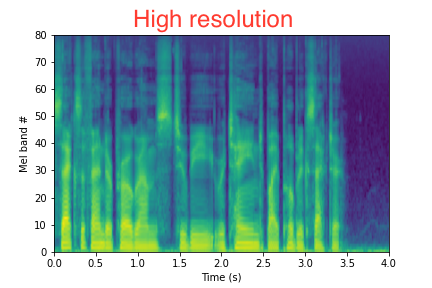

Spectrograms

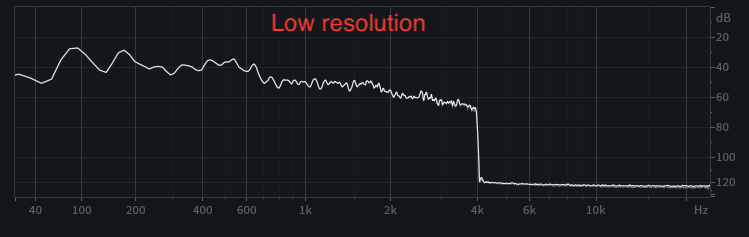

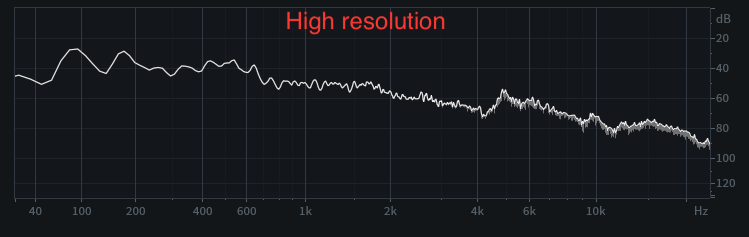

1D Spectra